Does advertising still work?

On one hand…

A new report from the IPA databank shows that ads winning creativity awards now show the lowest correlation with sales in the database's 24-year history.

Marc Pritchard, chief marketer at Procter & Gamble, has publicly questioned whether advertising is worth it when there are other ways to deliver brand content.

Many marketers have been led to believe their advertising had no effect because the brand KPIs from their brand trackers and ad-exposure-triggered surveys showed no movement.

On the other hand…

The only way to know whether advertising is working is through measurement and analysis. So when advertising appears to fail, is it the advertising or the measurement system that is failing?

I work extensively with digital data and user-level analysis: location data to measure store visitation, TV data matched at the user level to sales or digital conversions, digital A/B testing, and multi-touch attribution (MTA). I have rarely seen an ad performance evaluation that did not show at least some lift.
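To make "lift" concrete, here is a minimal sketch of one common way an exposed-vs.-control comparison can be summarized: the relative lift in conversion rate plus a pooled two-proportion z-test. The function name `conversion_lift` and all counts below are hypothetical, and real matched designs may call for other estimators; the sketch only illustrates why scale matters.

```python
from math import sqrt
from statistics import NormalDist


def conversion_lift(exposed_conv, exposed_n, control_conv, control_n):
    """Relative lift of exposed over control, with a two-sided p-value.

    Hypothetical helper: uses a pooled two-proportion z-test (normal approximation).
    """
    p_exposed = exposed_conv / exposed_n
    p_control = control_conv / control_n
    lift = (p_exposed - p_control) / p_control
    # Pooled conversion rate under the null hypothesis of no ad effect
    p_pool = (exposed_conv + control_conv) / (exposed_n + control_n)
    se = sqrt(p_pool * (1 - p_pool) * (1 / exposed_n + 1 / control_n))
    z = (p_exposed - p_control) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return lift, p_value


# Hypothetical cells: the same 5% lift measured at two very different scales
for n, exposed, control in [(5_000_000, 10_500, 10_000), (50_000, 105, 100)]:
    lift, p = conversion_lift(exposed, n, control, n)
    print(f"n per cell={n:>9,}  lift={lift:.1%}  p={p:.4f}")
```

With these made-up numbers, the same 5% lift is clearly significant at 5 million users per cell (p well below 0.01) but indistinguishable from noise at 50,000 per cell (p above 0.5).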

The explanation that measurement is the problem also fits what I have learned from my own white paper research. Every brand has its own high- and medium-propensity buyers, and the subset of them who are close to an upcoming purchase will deliver 5 to 16 TIMES the return on ad spend (ROAS). So even if the creative is not very artistic, advertising will work against these segments, because it reminds consumers of what they already know and tips the scales against other brands they also view favorably. But this effect can get lost in marketing mix models and small-sample surveys, especially for smaller brands.
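A back-of-the-envelope sketch of how a small, near-purchase segment can drive a large share of returns yet barely appear in a small survey. Every number here is hypothetical: a segment receiving 5% of spend at 10 times the ROAS of everyone else (inside the 5 to 16 times range above), with spend assumed proportional to audience share. The helper names are illustrative only.

```python
def segment_contributions(segments):
    """Spend-weighted blended ROAS and each segment's share of the total return.

    segments: dict of name -> (share_of_spend, roas); shares are assumed to sum to 1.
    """
    returns = {name: share * roas for name, (share, roas) in segments.items()}
    blended = sum(returns.values())
    shares_of_return = {name: r / blended for name, r in returns.items()}
    return blended, shares_of_return


def expected_respondents(sample_size, audience_share):
    """Expected number of survey respondents who fall in a segment."""
    return sample_size * audience_share


# Hypothetical inputs; spend is assumed proportional to audience share
segments = {
    "in_market": (0.05, 4.0),     # 5% of spend at 10x the ROAS of the rest
    "everyone_else": (0.95, 0.4),
}
blended, shares = segment_contributions(segments)
print(f"blended ROAS: {blended:.2f}")
print(f"in-market share of return: {shares['in_market']:.0%}")
print(f"in-market respondents in a 400-person survey: "
      f"{expected_respondents(400, 0.05):.0f}")
```

Under these assumptions the blended ROAS is about 0.58 and the 5% segment produces roughly a third of the total return, yet a 400-person survey would be expected to contain only about 20 people from that segment.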

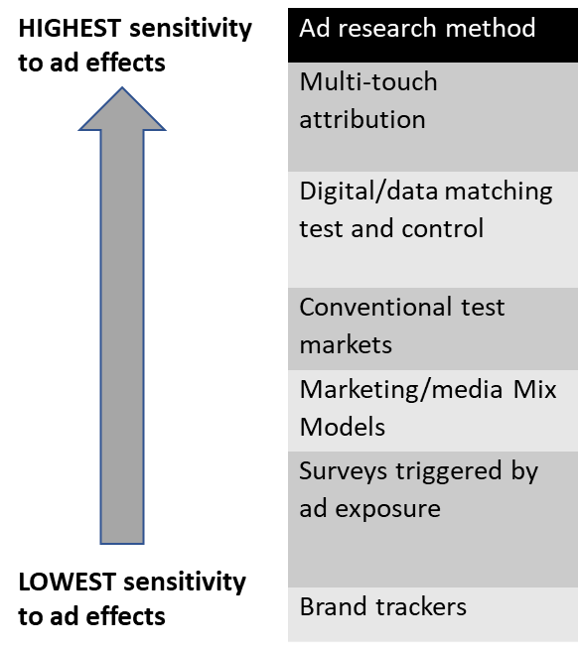

Consider the following table of methods used to evaluate whether advertising is working. I have rank-ordered the methods by how sensitive I expect each one to be in picking up advertising effects on sales if all of them were put through a bake-off against the same set of campaigns (or against synthetic data). If the method you use to evaluate advertising effectiveness is near the bottom of this list, the problem might be your measurement system.

| Sensitivity to ad effects | Ad research method | Rationale |
| --- | --- | --- |
| Highest | Multi-touch attribution | User-level data, often at a scale of millions of records. Finds ad impact even for smaller-investment media tactics, segment by segment. Always finds which tactics work better. |
| | Digital/data matching test and control | Precise user-level matching of exposed and control cells, often using millions of records. Can find digital or traditional advertising effects when user-level matched data are available, say, TV and frequent shopper data. A true experimental design, considered the gold standard for incrementality. |
| | Conventional test markets | Looks for the effect of advertising on sales, but uncontrolled factors often mask sales effects. |
| | Marketing/media mix models | Ad effects can be swamped by promotion effects and masked by multicollinearity. Not sensitive to granular media tactics. |
| | Surveys triggered by ad exposure | The relationship between survey measures of brand favorability and sales has not been sufficiently studied. Smaller sample sizes lead to insufficient statistical power to detect small percentage movements in outcome measures. |
| Lowest | Brand trackers | Ongoing surveys that produce brand equity KPIs that simply do not move very much in practice. |
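To put a rough number on the statistical power point for ad-exposure surveys, here is a minimal sketch of the standard normal-approximation sample size formula for comparing two proportions (exposed vs. control favorability). The favorability rates, the 5% significance level, and the 80% power target are hypothetical, conventional assumptions, and real survey designs may add design effects that raise the required sample further. The function name `n_per_cell` is illustrative.

```python
from math import ceil, sqrt
from statistics import NormalDist


def n_per_cell(p_control, p_exposed, alpha=0.05, power=0.80):
    """Approximate respondents needed per cell for a two-sided two-proportion z-test.

    Hypothetical helper using the normal approximation, no continuity correction.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_beta = NormalDist().inv_cdf(power)           # quantile for the desired power
    p_bar = (p_control + p_exposed) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p_control * (1 - p_control) + p_exposed * (1 - p_exposed))
    ) ** 2
    return ceil(numerator / (p_exposed - p_control) ** 2)


# Hypothetical favorability scores: a 2-point move vs. a 10-point move
print(n_per_cell(0.30, 0.32))
print(n_per_cell(0.30, 0.40))
```

Detecting a 2-point move from 30% to 32% takes roughly 8,400 respondents per cell, while a 10-point move needs only a few hundred. Since brand KPIs rarely move by 10 points, a survey with a few hundred respondents per cell will usually miss real effects.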

Want a sensitive measurement system for ad effects? The more it is based on behavior rather than surveys, large scale rather than small scale, and user-level data rather than aggregated models or KPIs, the more sensitive your research will be, and the more likely you are to find that advertising is working and which tactics are working.

In my consulting work, CMOs and brand leaders have told me that the most important thing an ad effectiveness measurement system can do is to “quell the panic” that sets in when a campaign doesn’t show results in the first few weeks. It is no coincidence that these marketers worked at companies that relied on insensitive, slow measurement such as surveys and marketing mix models rather than attribution modeling or digital test vs. control methods.
